Attached is some code that makes use of a measure of correlation I mentioned in my first real paper on A.I. (see the definition of “symm”) that I’ve finally gotten around to coding as a standalone measure.

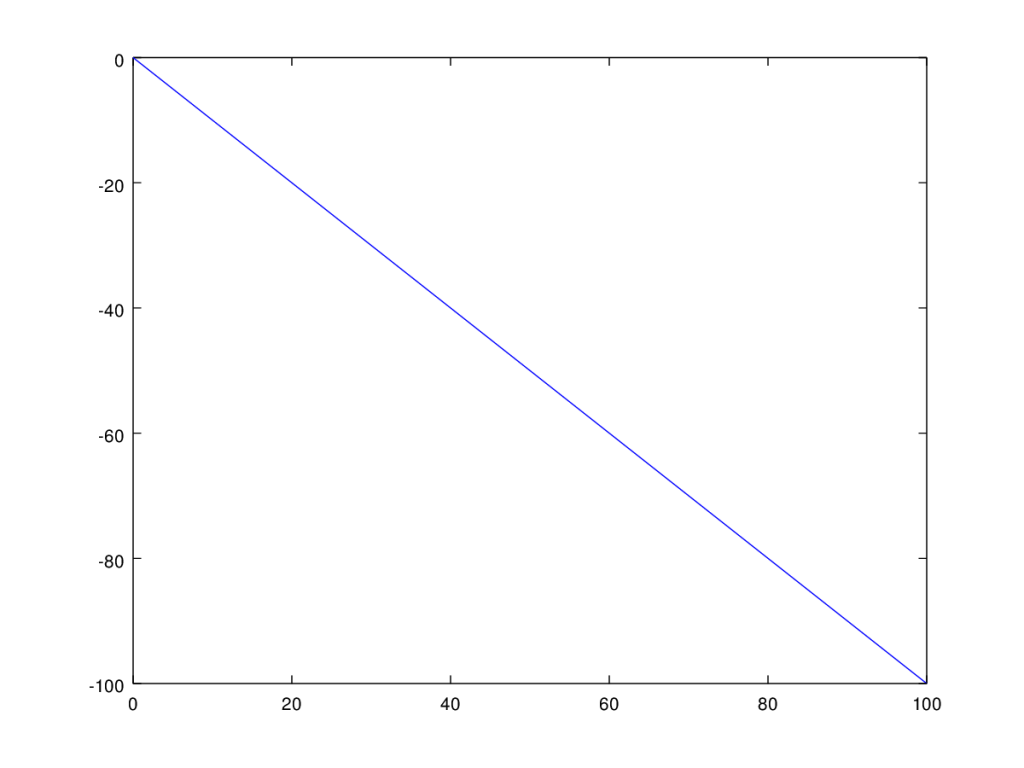

The code is annotated to explain how it works, but the basic idea is that sorting reveals information about the correlation between two vectors of numbers. For example, imagine you have a set of numbers from 1 to 100, listed in ascending order, in vector $x$, and the numbers -1 to -100, in vector $y$, listed in descending order. Plotted in the $xy$ plane, this is a straight line sloping downward.

Now sort each set of numbers in ascending order, and save the resultant mappings of ordinals. For example, in the case of vector $x$, the list is already sorted in ascending order, so the ordinals don't change. In contrast, in the case of vector $y$, the list is sorted in descending order, so ordinal 1 gets mapped to the last spot, ordinal 2 gets mapped to the second to last spot, and so on. This will produce another pair of vectors that represent the mappings generated by the sorting function, which for vector $x$ will be $(1, 2, \ldots, N)$, and for vector $y$ will be $(N, N-1, \ldots, 1)$, where $N$ is the number of items in each vector. Therefore, by taking the difference between the corresponding ordinals in these two mapping vectors, we can arrive at a measure of correlation, since it tells us to what extent the values in $x$ and $y$ share the same ordinal relationships, which is more or less what correlation attempts to measure. This can be easily mapped to the traditional $[-1, 1]$ scale, and the results are exactly what intuition suggests, which is that the example above constitutes perfect negative correlation, an increasing line constitutes perfect positive correlation, and adding noise, or changing the shape, diminishes correlation.
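To make the idea concrete, here is a minimal Python sketch of one way the ordinal differences could be turned into a score. It is not the attached code: the function names `sort_positions` and `ordinal_correlation` are hypothetical, and the rescaling is an assumption on my part, namely summing the absolute differences and dividing by the largest sum any reordering can produce, $\lfloor N^2/2 \rfloor$ (this is the normalization used for Spearman's footrule). It also assumes there are no tied values.

```python
def sort_positions(values):
    """For each index i, return the 1-based spot values[i] lands in after an ascending sort."""
    # sorted() is stable, so tied values keep their original relative order.
    order = sorted(range(len(values)), key=lambda i: values[i])
    positions = [0] * len(values)
    for spot, index in enumerate(order, start=1):
        positions[index] = spot
    return positions


def ordinal_correlation(x, y):
    """Map the total difference between sort positions onto [-1, 1].

    Assumption (not from the original code): the score is
    1 - 2 * D / floor(N^2 / 2), where D is the sum of absolute
    differences between corresponding sort positions.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    if n < 2:
        raise ValueError("need at least two items")
    px, py = sort_positions(x), sort_positions(y)
    total = sum(abs(a - b) for a, b in zip(px, py))
    max_total = (n * n) // 2  # reached when one ordering is the exact reverse of the other
    return 1.0 - 2.0 * total / max_total


x = list(range(1, 101))        # 1 to 100, ascending
y = [-v for v in x]            # -1 to -100, descending
print(ordinal_correlation(x, y))                        # the example from the text
print(ordinal_correlation(x, x))                        # an increasing line
print(ordinal_correlation([1, 2, 3, 4], [1, 3, 2, 4]))  # one swapped pair
```

With this particular rescaling, the example from the text scores -1, an increasing line scores 1, and swapping a single adjacent pair in a four-item list pulls the score down to 0.5.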

Because I’ve abstracted sorting using information theory, you could, I suppose, measure the correlation between any two ordered sets of mathematical objects.

Also attached is another script that uses basically the same method to measure correlation between numerical data and ordinal data. The specific example attached allows you to measure which dimensions in a dataset (numerical) are most relevant to driving the value of the classifier (ordinal).
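As a rough illustration of the numerical-versus-ordinal case, the sketch below sorts the class labels by each dimension in turn and checks how close the resulting label sequence is to fully sorted. This is my own guess at a comparable procedure, not the attached script: the names `label_order_score` and `rank_dimensions` are hypothetical, the labels are assumed to be numeric ordinals, and the rescaling to $[-1, 1]$ (dividing by the mismatch between the ascending and descending label sequences) is an assumption.

```python
def label_order_score(feature, labels):
    """Score in [-1, 1]: how well sorting by `feature` also sorts `labels`.

    Assumption (not from the original script): the mismatch is the sum of
    absolute differences between the labels as ordered by the feature and
    the labels in ascending order, rescaled by the worst-case mismatch.
    """
    order = sorted(range(len(feature)), key=lambda i: feature[i])  # stable sort
    by_feature = [labels[i] for i in order]
    ascending = sorted(labels)
    descending = ascending[::-1]
    mismatch = sum(abs(a - b) for a, b in zip(by_feature, ascending))
    worst = sum(abs(a - b) for a, b in zip(ascending, descending))
    if worst == 0:
        return 0.0  # all labels identical, so no ordering information
    return 1.0 - 2.0 * mismatch / worst


def rank_dimensions(rows, labels):
    """Return (dimension index, score) pairs, strongest absolute score first."""
    columns = list(zip(*rows))  # one tuple per dimension
    scores = [(d, label_order_score(col, labels)) for d, col in enumerate(columns)]
    return sorted(scores, key=lambda pair: abs(pair[1]), reverse=True)


# Toy dataset: dimension 0 separates the two classes, dimension 1 does not.
rows = [[1, 5], [2, 1], [3, 4], [4, 2]]
labels = [0, 0, 1, 1]
for dimension, score in rank_dimensions(rows, labels):
    print(dimension, score)
```

In this toy dataset, dimension 0 comes out on top because sorting by it puts the labels in perfect order, while sorting by dimension 1 leaves them half scrambled.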