I’m pleased to announce that after years of basically non-stop research and coding, I’ve completed the Free Version of my AutoML software, Black Tree (Beta 1.0). The results are astonishing: consistently high accuracy, with simply unprecedented runtimes, all in an easy-to-use GUI written in Swift for macOS (version 10.10 or later). The table below lists the accuracy and total runtime for each test dataset:

| Dataset | Accuracy | Total Runtime (Seconds) |
|---|---|---|
| UCI Credit (2,500 rows) | 98.46% | 217.23 |
| UCI Ionosphere (351 rows) | 100.0% | 0.7551 |
| UCI Iris (150 rows) | 100.0% | 0.2379 |
| UCI Parkinsons (195 rows) | 100.0% | 0.3798 |
| UCI Sonar (208 rows) | 100.0% | 0.5131 |
| UCI Wine (178 rows) | 100.0% | 0.3100 |
| UCI Abalone (2,500 rows) | 100.0% | 10.597 |
| MNIST Fashion (2,500 images) | 97.76% | 25.562 |
| MRI Dataset (1,879 images) | 98.425% | 36.754 |

The results above were generated using Black Tree (Beta 1.0), by applying the “Supervised Delta Prediction” algorithm to each dataset. You can download these datasets in a ready-to-use format from Dropbox (UCI Datasets, MNIST Fashion, MRI Dataset).

The Free Version (Beta 1.0) is of course limited, but nonetheless generous, allowing for non-commercial classification and clustering, including image classification, for any dataset with no more than 2,500 rows / images. The Pro Version will include nearly my entire A.I. library, for just $199 per user per year, with no limit on the number of rows, allowing you to classify and cluster datasets with tens of millions of rows, in polynomial time, on a consumer device, without cloud computing or a data scientist (see Vectorized Deep Learning, generally). You can also purchase a lifetime commercial license of the Pro Version for just $1,000 per user.

INSTALLATION

You can download the executable file from the Black Tree website (11.1 MB). This can be placed anywhere you like on your machine, though upon opening the application, it will automatically create directories in “/Users/[yourname]/Documents/BlackTree”. Because of macOS security settings, the first time you run Black Tree, you will have to right-click the application icon and select “Open”. This should cause a notice to pop up (eventually), saying that you downloaded the application from the Internet and asking whether you want to open it anyway.

You must also download and install Octave, which is free, and available here. For security reasons, Octave will also require you to right-click the application file the first time you open it (for the first time only). Then, if you want to run image classification, you must install Octave’s image package, which can be done by typing the following code into Octave’s command line:

pkg install -forge image

If for whatever reason, the package fails to download (e.g., no internet connection), then you can manually install the image package by downloading it from SourceForge, and then typing the following code into Octave’s command line:

pkg install [local_filename],

where “local_filename” is the full path and filename to the image package on your machine.

When you open Black Tree for the first time, it will automatically generate all of the necessary Octave command line code for you (including the code above), and copy it to your clipboard, so all you have to do is open Octave, and press CTRL + V to complete installation of Black Tree. Note that Black Tree will also automatically download Octave source code and sample datasets the first time you run it, so there will be some modest delay (only the first time) before you see the Black Tree GUI pop up.

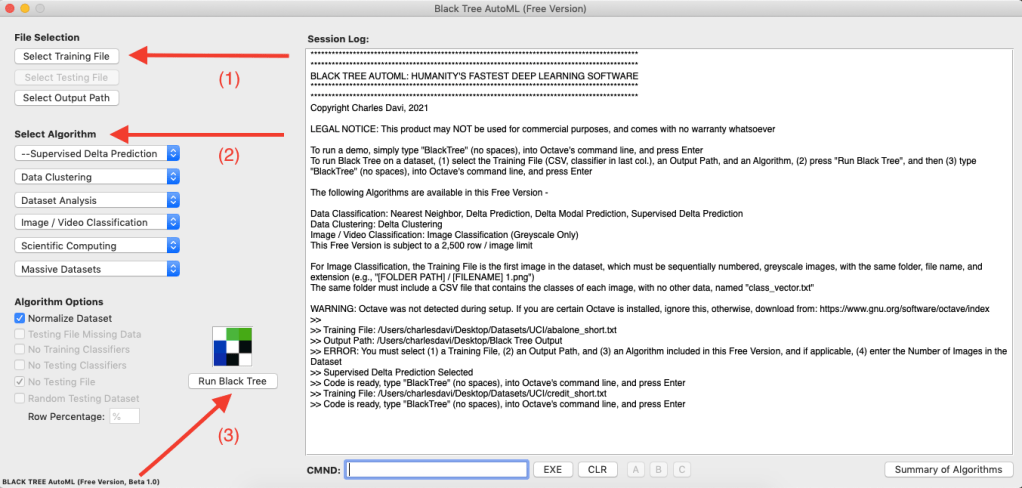

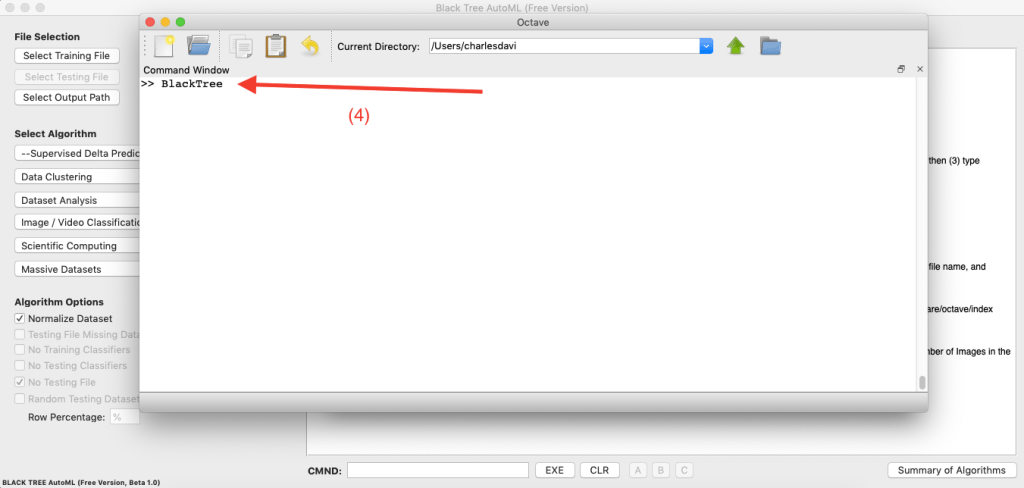

RUNNING BLACK TREE

Data Classification / Clustering

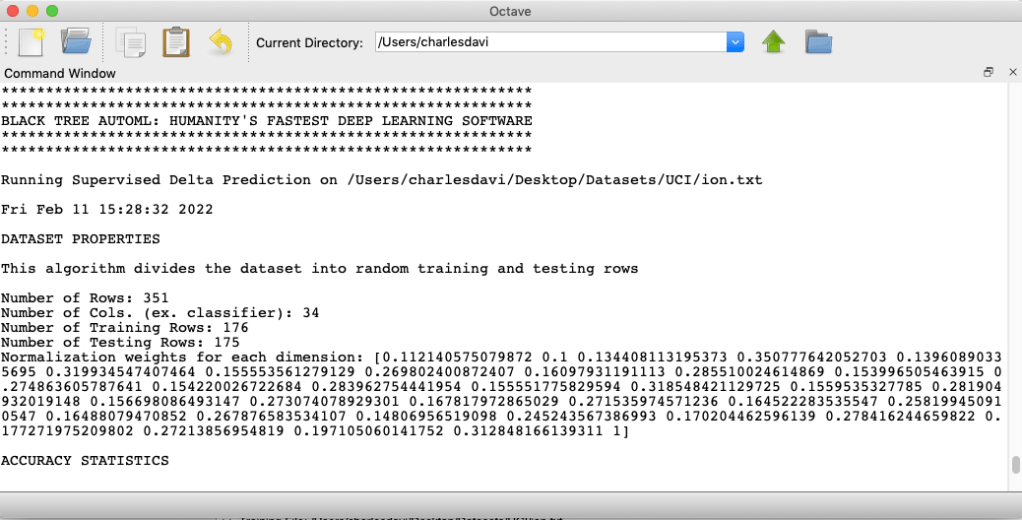

To run Data Classification / Clustering, simply (1) select a Training File and Output Path, (2) select an Algorithm, (3) press “Run Black Tree”, then (4) type “BlackTree”, no spaces, into Octave’s command line, and press Enter. That’s it. Note that the Free Version does not allow you to select both a training and testing file, and instead runs the selected algorithm on a single dataset. The exception is Supervised Delta Prediction, which automatically splits the selected dataset exactly in half, into random training and testing datasets. This algorithm is automatically applied for Image Classification in the Free Version. The Pro Version will allow you to apply the algorithm of your choice to Image Classification problems. Note that the dataset must be a plain text, CSV file, with integer classifier labels in column

.
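To make the dataset format and the half-and-half split concrete, here is a minimal Python sketch. It is not Black Tree’s code, which runs in Octave. The function names are hypothetical, and the sketch assumes the integer label sits in the last column of each CSV row, which may not match your file.

```python
import csv
import random


def load_dataset(path):
    """Read a plain-text CSV file into feature rows and integer labels.

    Assumption (not confirmed by Black Tree): the label is the LAST column.
    """
    features, labels = [], []
    with open(path, newline="") as handle:
        for row in csv.reader(handle):
            if not row:
                continue  # skip blank lines
            features.append([float(value) for value in row[:-1]])
            labels.append(int(float(row[-1])))
    return features, labels


def split_in_half(rows, seed=None):
    """Shuffle the rows and split them into two random halves.

    With an odd number of rows, the second half gets the extra row.
    """
    rng = random.Random(seed)
    shuffled = list(rows)
    rng.shuffle(shuffled)
    middle = len(shuffled) // 2
    return shuffled[:middle], shuffled[middle:]
```

Passing a fixed `seed` makes the split reproducible, which is handy when comparing runs on the same file.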

Image Classification

For Image Classification, select the first image in the dataset (this is the “Training File”). Black Tree will automatically load and process the entire folder of images, though they must be sequentially named files, with identical names and extensions (e.g., “MNIST_fashion1.jpg”, “MNIST_fashion2.jpg”, … ). The classifiers for the images must be in the same folder, in a plain text, CSV file named “class_vector.txt”, in order, with no other information or spacing. Then select an Output Path, select “Image Classification” as the algorithm, and enter the number of images in the dataset in CMND, and press “EXE”. Then press “Run BlackTree”, and simply type the word “BlackTree” (no spaces) into Octave’s command line, and press Enter.
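Before pressing “Run BlackTree”, it can help to check that the image folder follows the naming rules above. The following Python sketch is one way to do that. The helper names are hypothetical, and because the exact layout of “class_vector.txt” is not spelled out here, the label counter accepts either commas or line breaks as separators.

```python
import re
from pathlib import Path


def expected_image_names(first_image, count):
    """Derive the sequential file names implied by the first image's name."""
    first = Path(first_image)
    match = re.fullmatch(r"(.*?)(\d+)", first.stem)
    if match is None:
        raise ValueError("the first image name must end in a number")
    prefix, start = match.group(1), int(match.group(2))
    return [f"{prefix}{start + i}{first.suffix}" for i in range(count)]


def missing_images(folder, first_image, count):
    """List the expected image files that are absent from the folder."""
    folder = Path(folder)
    names = expected_image_names(first_image, count)
    return [name for name in names if not (folder / name).is_file()]


def count_labels(folder):
    """Count the labels in class_vector.txt (comma or newline separated)."""
    text = (Path(folder) / "class_vector.txt").read_text()
    return len([token for token in re.split(r"[,\s]+", text) if token])
```

If `missing_images` returns an empty list and `count_labels` equals the number of images you enter in CMND, the folder is at least consistent with the naming and labeling rules described above.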

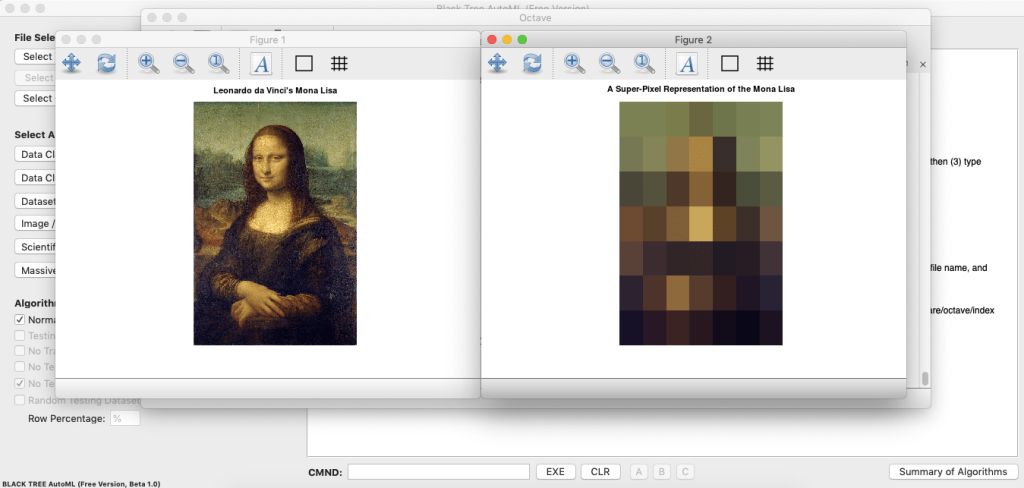

As noted, you cannot select the prediction algorithm, as it is automatically fixed to Supervised Delta Prediction for Image Classification. Nor can you select the image pre-processing algorithm, described in Section 1.3 of Vectorized Deep Learning, which generates super-pixel representations of each image in the dataset, thereby compressing them and allowing for extremely fast clustering and prediction. Finally, the images must be greyscale, and the dataset cannot contain more than 2,500 images. These limits will not apply to the Pro Version, which will include a wide variety of Image and Video Classification algorithms, with no limits on the number of rows or formats, or on your ability to mix and match prediction and image pre-processing algorithms. The Free Version includes a teaser of RGB image processing, using a picture of Da Vinci’s Mona Lisa.
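For readers unfamiliar with super-pixels, the general idea of compressing a greyscale image into a coarser grid can be illustrated with plain block averaging. This Python sketch is only an illustration of that general idea, not the algorithm from Section 1.3 of Vectorized Deep Learning, and the function name and fixed block size are assumptions.

```python
def superpixels(image, block):
    """Average non-overlapping block x block tiles of a greyscale image.

    `image` is a list of equal-length rows of pixel intensities.
    Tiles at the right and bottom edges may be smaller than `block`.
    """
    rows, cols = len(image), len(image[0])
    compressed = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            tile = [
                image[i][j]
                for i in range(r, min(r + block, rows))
                for j in range(c, min(c + block, cols))
            ]
            out_row.append(sum(tile) / len(tile))
        compressed.append(out_row)
    return compressed
```

A 28 x 28 image reduced with `block=4` becomes a 7 x 7 grid, so each image is described by 49 values instead of 784, which is why this kind of compression speeds up distance-based methods.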

HOW DOES IT WORK?

The basic premise behind the software is that if a dataset is what I call locally consistent, then you can prove that the Nearest Neighbor algorithm will generate perfect accuracy (see Analyzing Dataset Consistency, generally). In simple terms, a dataset is locally consistent if classifications don’t change over small distances. In practice, of course, datasets are not perfect, so the algorithms attempt to identify likely errors, ex ante, and “reject” them, leaving only the best predictions. This typically produces very high accuracies, and in the case of the Supervised Delta Prediction algorithm, nearly and at times actually perfect accuracies. The advantage of this approach over Neural Networks and other typical techniques is that all of these algorithms have polynomial runtimes, and moreover, many of the processes can be executed in parallel. This leads to what is at times almost instantaneous and highly accurate classifications and predictions, and in every case, radically more efficient processing than any approach to deep learning of which I am aware.
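The combination of Nearest Neighbor prediction and rejection can be sketched in a few lines of Python. This is a generic illustration, not the Supervised Delta Prediction algorithm itself: it assumes a single distance threshold, here called `delta`, and rejects any prediction whose nearest training point is farther away than that. How Black Tree actually chooses what to reject is described in the papers cited above, not here.

```python
import math


def nearest_neighbor(train_x, train_y, point):
    """Return the label of the closest training row and its distance."""
    best = min(range(len(train_x)), key=lambda i: math.dist(train_x[i], point))
    return train_y[best], math.dist(train_x[best], point)


def predict_with_rejection(train_x, train_y, test_x, delta):
    """Predict by nearest neighbor; return None when the match is too far."""
    predictions = []
    for point in test_x:
        label, distance = nearest_neighbor(train_x, train_y, point)
        predictions.append(label if distance <= delta else None)
    return predictions


def accuracy_on_accepted(predictions, truth):
    """Accuracy over the predictions that were not rejected."""
    kept = [(p, t) for p, t in zip(predictions, truth) if p is not None]
    if not kept:
        return None  # every prediction was rejected
    return sum(p == t for p, t in kept) / len(kept)
```

Note the trade-off in this sketch: a smaller `delta` rejects more test rows, so accuracy on the accepted rows tends to rise while the share of rows that receive a prediction falls. The brute-force search is quadratic in the number of rows, which is polynomial but far from optimized.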

I have also developed specialized algorithms designed for thermodynamics, that can be applied to any massive Euclidean dataset, that allow for tens of millions of vectors to be classified and clustered in polynomial time (see Section 1.4 of Vectorized Deep Learning). These algorithms are of course not included in the Free Version, but will be included in the Pro Version, which again, is just $199 per user per year, or $1,000 for one user, for a lifetime commercial license. The Pro Version should be available in about a month from today.

I welcome feedback on the Free Version, particularly from Swift developers who have any insights, criticisms, tips, or questions. You can reach me at [charles at BlackTreeAutoML dot com].
