I’ve implemented a vectorized rendition of my original image partition algorithm, and the results are excellent and remarkably efficient. I’ve yet to apply it to a formal classification problem, but the partitions appear to be just as good as those produced by the original algorithm, and the runtimes are orders of magnitude smaller. This suggests that it should work just as well as the original algorithm as preprocessing for image classification problems, the only difference being that it’s much more efficient.

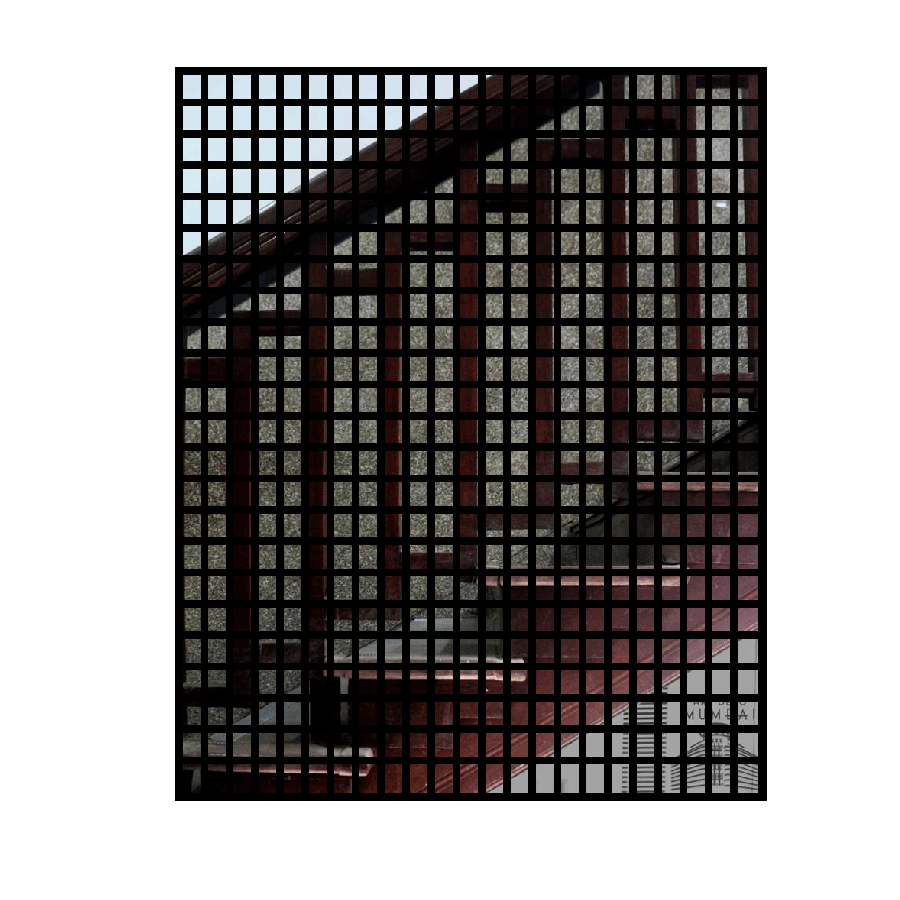

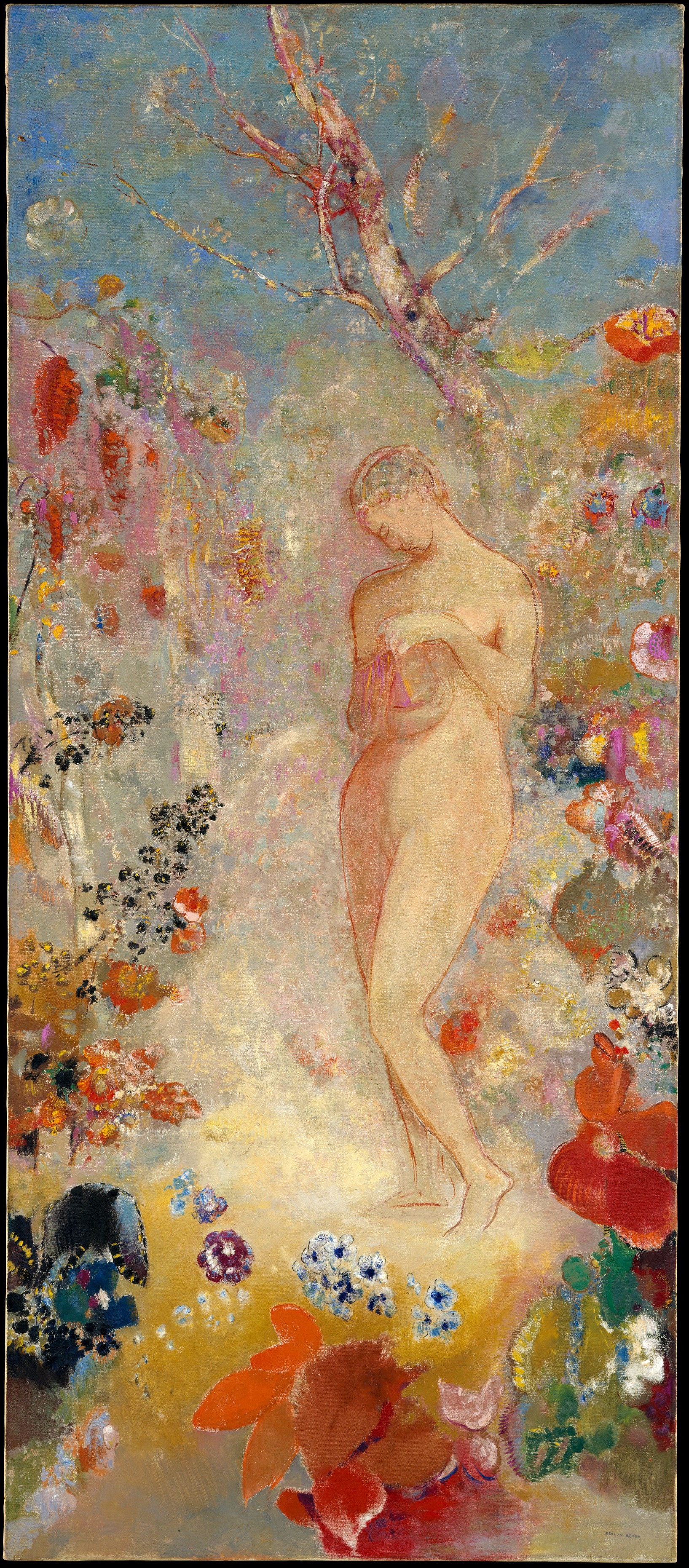

Here are some examples, each showing the original image, the boundaries generated by the partition, and the average color of each partition region. The average colors of the regions would then be assembled into a matrix or vector that represents the image (effectively a collection of superpixels), which would serve as the input to a classification algorithm.
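To make the region-averaging step concrete, here is a minimal sketch of turning a partition into average colors and a feature vector. It assumes the partition is already available as a label map (one integer region id per pixel, in `range(num_regions)`), which is not how the original Octave code is necessarily organized. The function names `region_mean_colors`, `feature_vector`, and `superpixel_image` are hypothetical. How regions would be ordered or padded to give every image a vector of the same length is not specified in the post, so the sketch leaves that open.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]


def region_mean_colors(image: List[List[Color]],
                       labels: List[List[int]],
                       num_regions: int) -> List[Color]:
    """Average RGB color of each region; labels[i][j] is the region id of pixel (i, j)."""
    sums = [[0.0, 0.0, 0.0] for _ in range(num_regions)]
    counts = [0] * num_regions
    for img_row, lab_row in zip(image, labels):
        for (r, g, b), k in zip(img_row, lab_row):
            sums[k][0] += r
            sums[k][1] += g
            sums[k][2] += b
            counts[k] += 1
    means: List[Color] = []
    for k in range(num_regions):
        n = counts[k]
        if n == 0:
            # Hypothetical convention: an empty region gets black.
            means.append((0.0, 0.0, 0.0))
        else:
            means.append((sums[k][0] / n, sums[k][1] / n, sums[k][2] / n))
    return means


def feature_vector(means: List[Color]) -> List[float]:
    """Flatten the per-region colors (num_regions x 3) into one vector."""
    return [c for color in means for c in color]


def superpixel_image(labels: List[List[int]], means: List[Color]) -> List[List[Color]]:
    """Repaint every pixel with its region's average color (the third panel above)."""
    return [[means[k] for k in row] for row in labels]


# Tiny usage example: a 2 x 3 image split into two regions.
image = [[(255, 0, 0), (255, 0, 0), (0, 0, 255)],
         [(255, 0, 0), (0, 0, 255), (0, 0, 255)]]
labels = [[0, 0, 1],
          [0, 1, 1]]
means = region_mean_colors(image, labels, 2)
print(feature_vector(means))  # [255.0, 0.0, 0.0, 0.0, 0.0, 255.0]
```

Averaging with running sums touches each pixel once, so the cost of this step is linear in the number of pixels regardless of how many regions the partition produces.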

For each image, the original image size and the runtime of the partition algorithm on that image are listed below.

image size = 960 x 774 x 3

runtime = 0.61713 seconds

image size = 2048 x 1536 x 3

runtime = 3.1197 seconds

image size = 3722 x 1635 x 3

runtime = 7.2745 seconds
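As a rough check on how the runtime scales, the reported figures can be converted into pixels processed per second. This is only arithmetic on the three measurements above; the third dimension (the 3 color channels) is left out of the pixel count, and `RUNS` and `throughput` are names introduced just for this sketch.

```python
# Image dimensions (without the 3 color channels) and runtimes reported above.
RUNS = [
    ((960, 774), 0.61713),
    ((2048, 1536), 3.1197),
    ((3722, 1635), 7.2745),
]


def throughput(runs):
    """Return (pixel count, seconds, pixels per second) for each run."""
    rows = []
    for (a, b), seconds in runs:
        pixels = a * b
        rows.append((pixels, seconds, pixels / seconds))
    return rows


for pixels, seconds, rate in throughput(RUNS):
    print(f"{pixels:>9,d} px  {seconds:7.4f} s  {rate / 1e6:.2f} Mpx/s")
```

This prints roughly 1.20, 1.01, and 0.84 million pixels per second, so on these three images the runtime grows somewhat faster than the pixel count, although three timings are too few to pin down the scaling precisely.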

Octave Code:
